Operating systems provide a User Interface (UI) for the user. The user interface allows the user to control the computer using input devices, and it uses output devices to give feedback, such as displaying images and text on a screen.

There are three types of user interface:

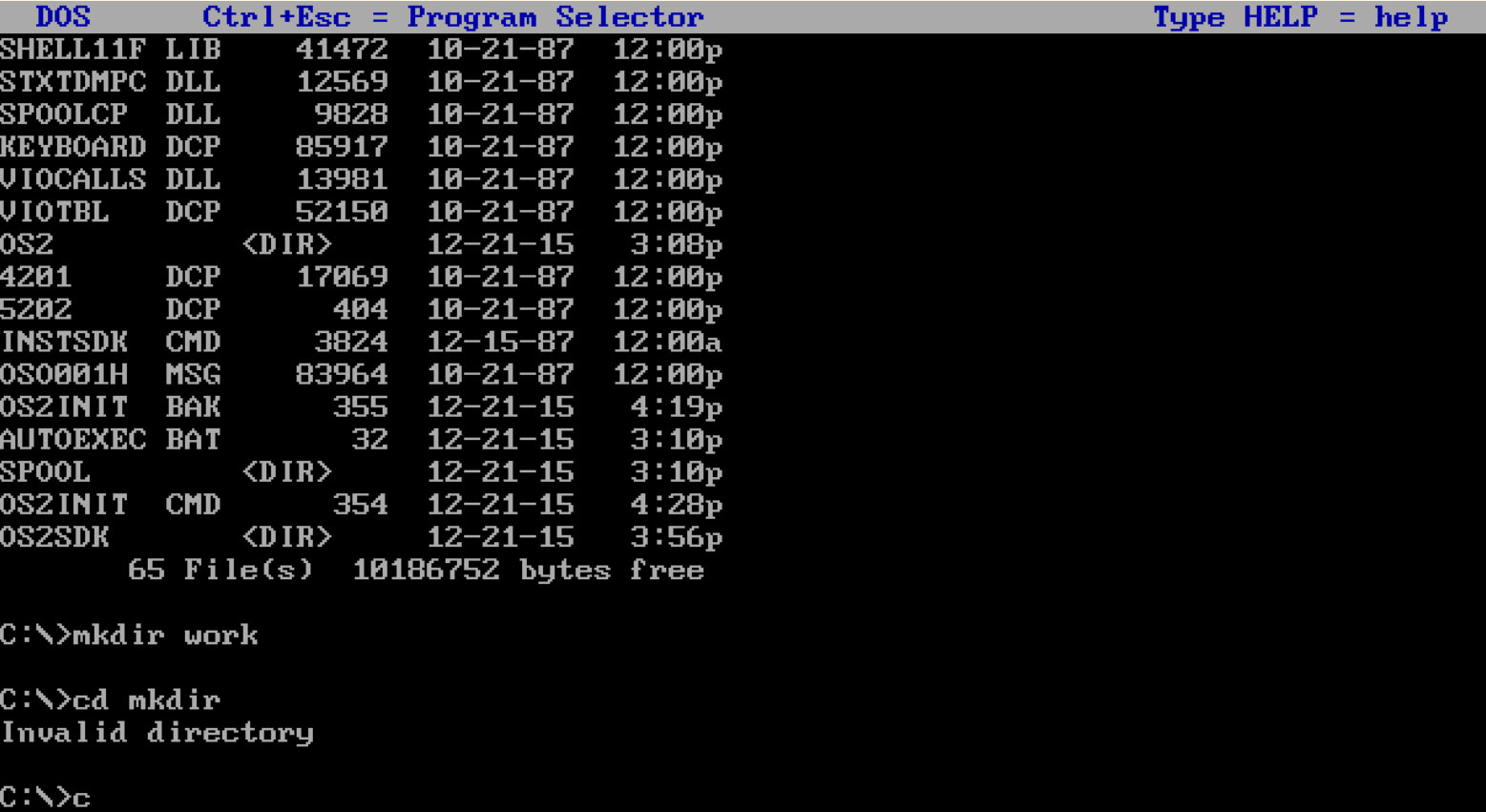

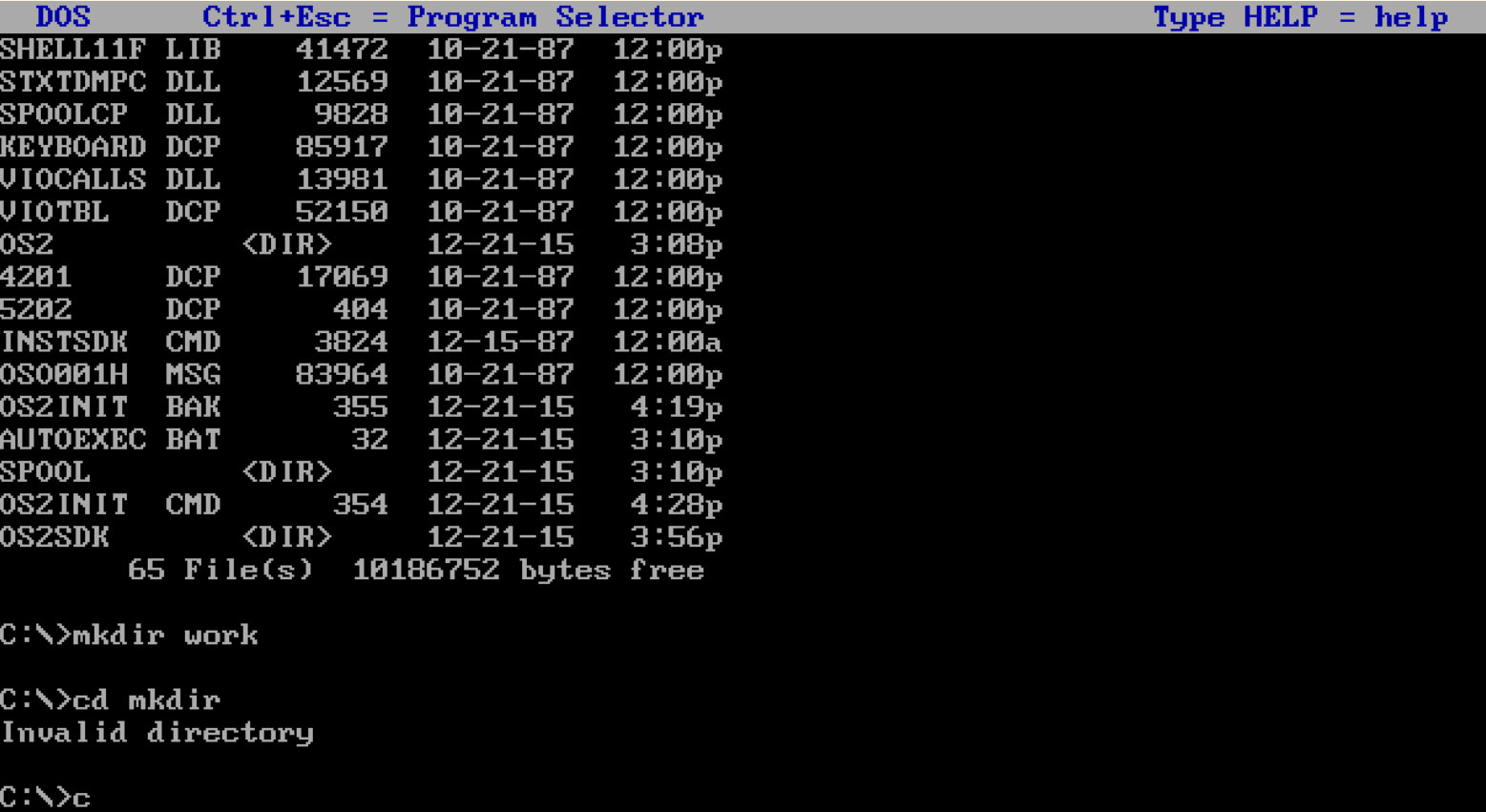

Command Line Interface (CLI)

A Command Line Interface is a more technical way of interacting with a computer. With a CLI, the user types in commands using a keyboard in order to control the computer.

In order to use a CLI, the user must remember these commands. If they cannot remember the commands, they will struggle to use the computer. Because of this, it is much rarer to come across a computer using a CLI, and CLIs are usually only used in specific situations or for specific applications.

However, although CLIs are not recommended for novice users, they use a fraction of the resources that a Graphical User Interface requires.
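The way a CLI matches typed text against known commands can be illustrated with a minimal Python sketch. The command names (`echo`, `add`) and the `run_command` helper are hypothetical and chosen only for illustration; a real shell is far more capable. The sketch shows why a mistyped or forgotten command gets the user nowhere: there is nothing on screen to click or explore.

```python
# Hypothetical command table: the names and behaviours are illustrative only.
COMMANDS = {
    "echo": lambda args: " ".join(args),                 # repeat the arguments back
    "add": lambda args: str(sum(int(a) for a in args)),  # add whole numbers
}


def run_command(line, commands):
    """Split one typed line into a command name and arguments, then run it."""
    parts = line.split()
    if not parts:
        return ""  # the user pressed Enter without typing anything
    name, args = parts[0], parts[1:]
    handler = commands.get(name)
    if handler is None:
        # The user must know the exact command name; the CLI offers no hints.
        return f"Unknown command: {name}"
    return handler(args)


# Simulate a user typing three lines, the last one with a typo.
for typed in ["echo hello world", "add 2 3", "ehco hello"]:
    print(">", typed)
    print(run_command(typed, COMMANDS))
```

The whole interface is plain text in and plain text out, which is part of why a CLI needs so few resources.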

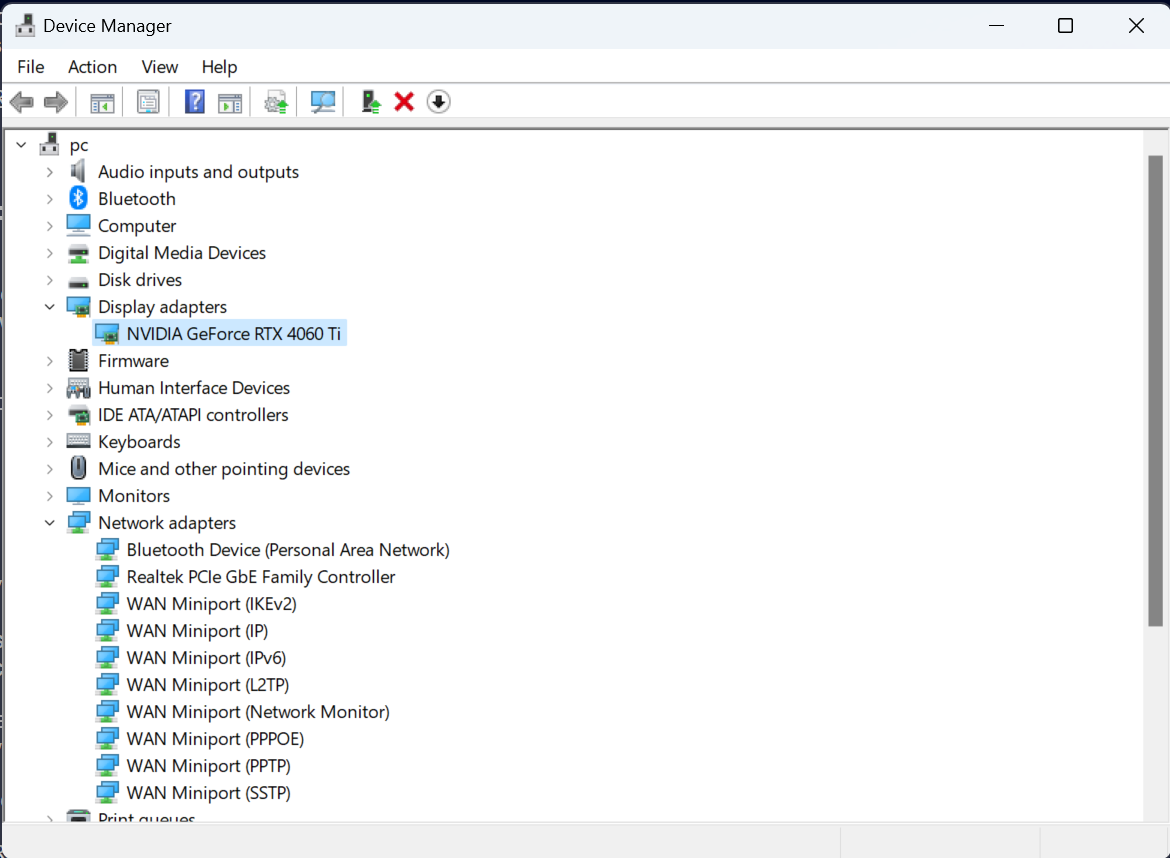

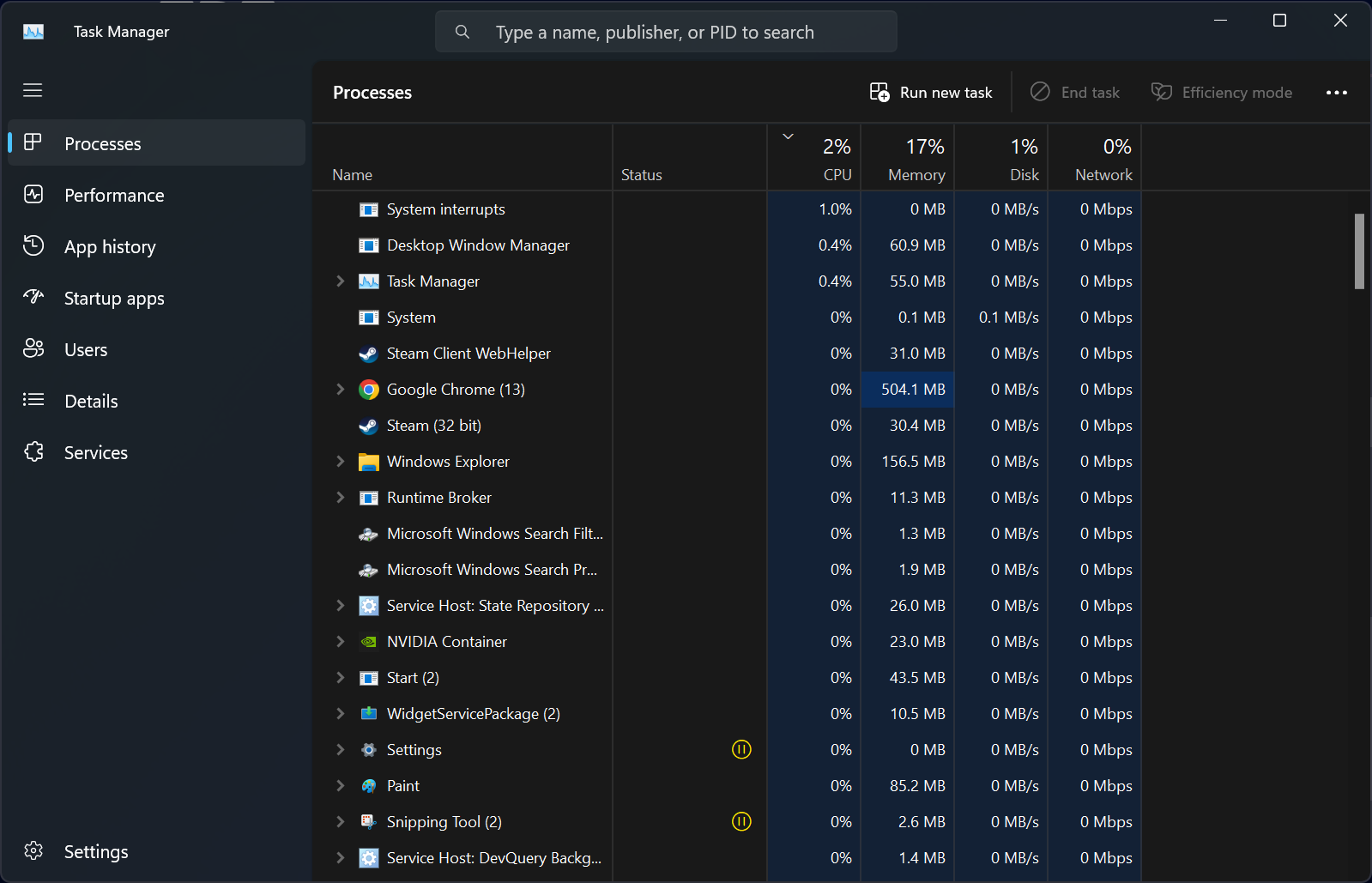

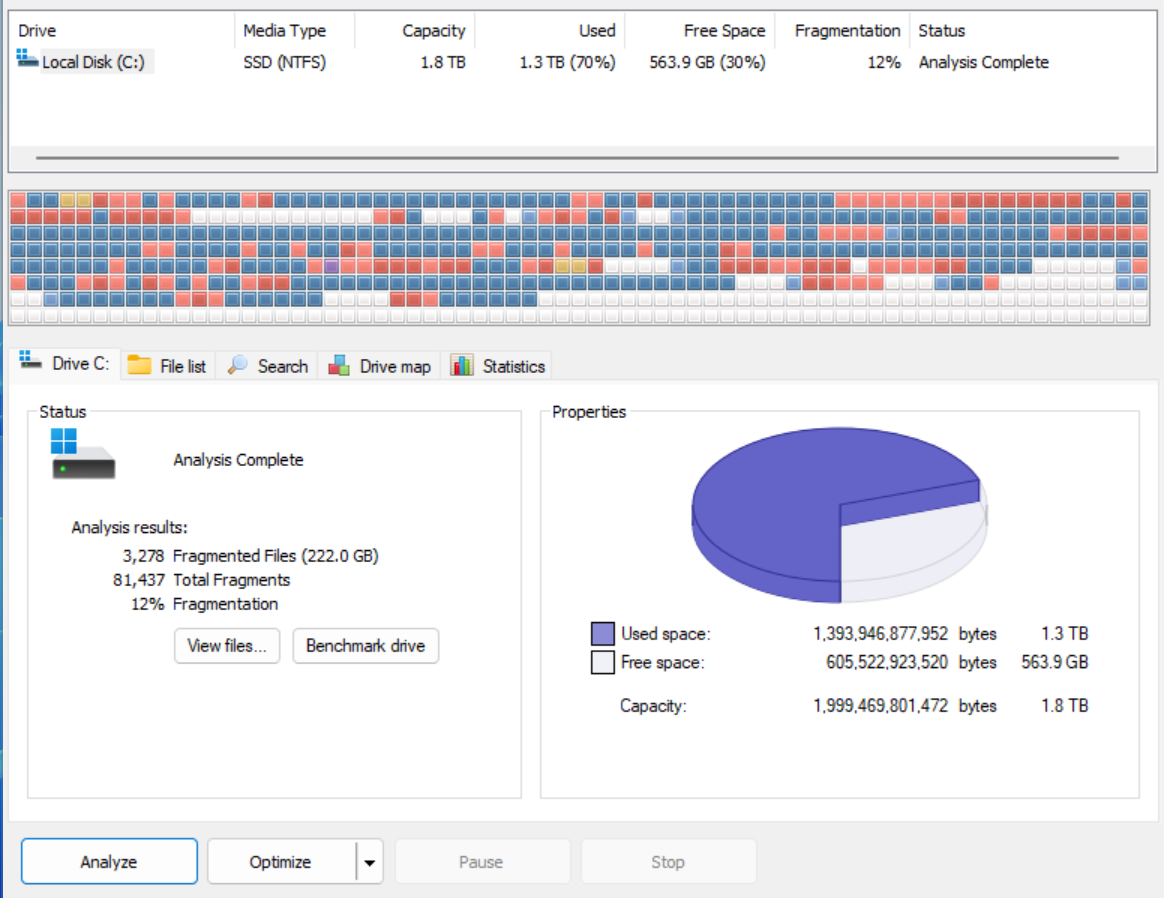

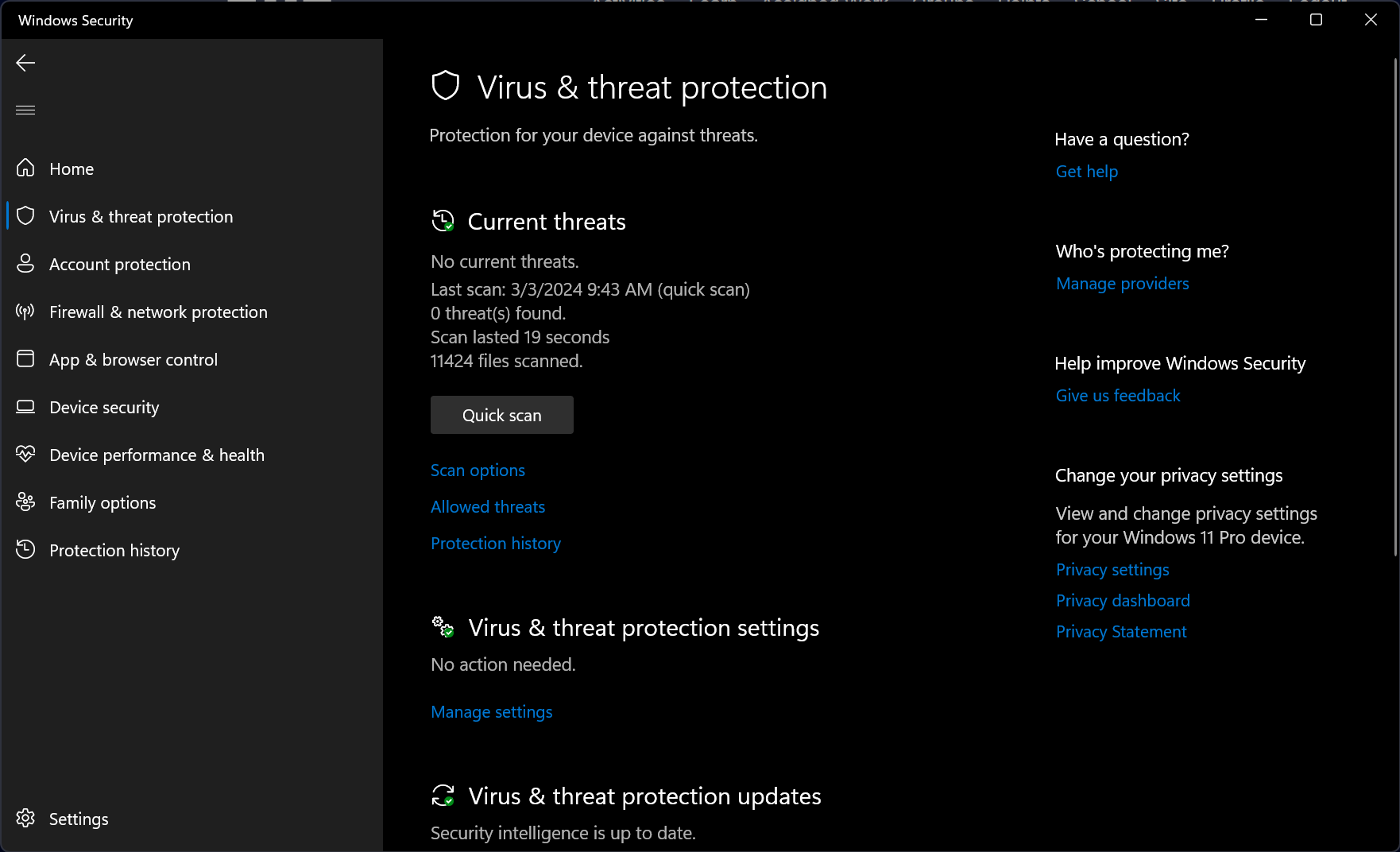

Graphical User Interface (GUI)

The Graphical User Interface is the most common user interface used on computers and can be found on desktop computers, laptops, smartphones and tablets. The user interacts with graphics, icons and menus on the screen using a mouse or a touch screen.

GUIs are so widespread because they are easy to use: tasks can be completed visually. This allows the user to explore the features of the computer and use trial and error to complete tasks if necessary.

Graphical User Interfaces require more powerful hardware than a CLI in order to display the graphics. Most computers with a GUI will have a Graphics Processing Unit (GPU) to drive the graphics on the display.

Menu Interface

A menu interface is common in applications where ease of use is paramount. Menu interfaces are found in simple devices such as self-checkout machines, information help points and appliances.

To control a menu interface, the user navigates through a series of menu options, each of which leads to further options, until the user finds what they need.
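The idea of options leading to further options can be sketched as a nested structure in Python. The menu contents below are a made-up example loosely based on a self-checkout machine, and the `show` and `navigate` helpers are hypothetical names used only for this illustration.

```python
# Hypothetical menu tree: a dict is a menu, a string is the final result.
MENU = {
    "Scan items": {
        "Scan barcode": "Item scanned",
        "Look up item": {
            "Fruit": "Fruit list shown",
            "Bakery": "Bakery list shown",
        },
    },
    "Pay": {
        "Card": "Card payment started",
        "Cash": "Cash payment started",
    },
    "Call for help": "Assistant called",
}


def show(menu):
    """Return the numbered options the user would see for one menu."""
    return [f"{i}. {label}" for i, label in enumerate(menu, start=1)]


def navigate(menu, choices):
    """Follow a sequence of 1-based option numbers through the nested menus."""
    current = menu
    for choice in choices:
        if not isinstance(current, dict):
            raise ValueError("No further options")
        options = list(current)  # dicts keep their insertion order
        if not 1 <= choice <= len(options):
            raise ValueError(f"Invalid option: {choice}")
        current = current[options[choice - 1]]
    return current


print(show(MENU))
print(navigate(MENU, [2, 1]))  # choose "Pay", then "Card"
```

Because every valid choice is listed on screen, the user never has to remember anything. The trade-off is that they can only do what the menus offer.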